There's a fundamental difference between adding AI features to an existing application and building an application with AI as a core architectural component. I specialize in the latter: AI-native applications where intelligent processing isn't an afterthought but the foundation. These applications use LLMs, embeddings, and machine learning throughout their design, which enables capabilities, such as semantic search over unstructured data and natural language interfaces, that are difficult to deliver with rule-based software alone.

What "AI-Native" Actually Means

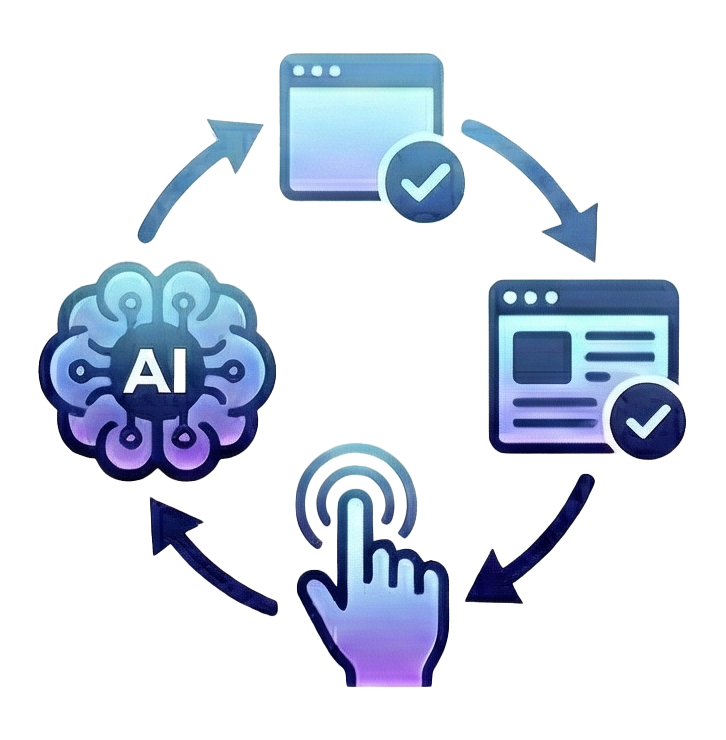

An AI-native application treats intelligence as a first-class concern from day one. Instead of adding a chatbot to an existing system, you design the system around what AI can do. Data flows are structured for ML consumption. Interfaces expect natural language. The architecture assumes that some operations will be probabilistic rather than deterministic.

This approach unlocks capabilities that bolt-on AI can't achieve:

- Semantic understanding of unstructured data throughout the pipeline

- Natural language interfaces that feel native, not awkward

- Intelligent defaults and suggestions based on context

- Graceful handling of ambiguity and uncertainty

- Continuous improvement as models and data evolve

Python-First Development

Python remains the backbone of AI development for good reason. Its ecosystem is hard to match: PyTorch, Transformers, LangChain, FastAPI, pandas, and hundreds of other libraries make AI development productive. I build all my AI-native applications in Python, using this ecosystem to deliver quickly without sacrificing quality.

My standard tech stack includes:

- FastAPI for production-ready APIs with automatic documentation

- Pydantic for data validation and structured outputs

- SQLAlchemy or SQLModel for database operations

- LangChain for LLM orchestration when appropriate

- Vector databases (Chroma, Qdrant, or pgvector) for semantic search

"Production-ready doesn't mean overengineered. I build applications that are robust and maintainable without unnecessary complexity."

Intelligent Data Pipelines

Traditional ETL moves data from A to B with transformations in between. AI-native data pipelines add understanding to that process. As data flows through, it gets classified, enriched, summarized, and connected to related information automatically.

I build pipelines that:

- Extract meaning from unstructured documents—contracts, emails, support tickets, research papers

- Classify incoming data automatically based on learned patterns

- Enrich records with information derived from AI analysis

- Flag anomalies and outliers that deserve human attention

- Generate summaries and insights as data accumulates

The result is data that's not just stored but understood—ready for queries that would be impossible with traditional structured data alone.
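As a rough illustration of the classify, enrich, and flag steps listed above, here is a minimal sketch using only the standard library. Everything in it is an assumption made for illustration: the `Record` shape, the stage names, and the keyword rules that stand in for real model calls (an LLM or a trained classifier would replace them in practice).

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    """One item moving through the pipeline (hypothetical shape)."""
    text: str
    label: str = "unclassified"
    summary: str = ""
    flags: list = field(default_factory=list)


def classify(record: Record) -> Record:
    # Stand-in for a model call: simple keyword rules, not learned patterns.
    lowered = record.text.lower()
    if "invoice" in lowered or "payment" in lowered:
        record.label = "billing"
    elif "error" in lowered or "crash" in lowered:
        record.label = "support"
    return record


def summarize(record: Record) -> Record:
    # Placeholder enrichment: the first sentence stands in for a generated summary.
    record.summary = record.text.split(".")[0].strip()
    return record


def flag_anomalies(record: Record) -> Record:
    # Anything the classifier could not place is routed to a human.
    if record.label == "unclassified":
        record.flags.append("needs_human_review")
    return record


# Stages run in order; each takes a Record and returns it with more information attached.
STAGES = [classify, summarize, flag_anomalies]


def run_pipeline(texts):
    results = []
    for text in texts:
        record = Record(text=text)
        for stage in STAGES:
            record = stage(record)
        results.append(record)
    return results
```

Keeping each stage as a plain function with the same signature makes it straightforward to swap a keyword rule for a model call later without touching the rest of the pipeline.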

Production-Ready APIs

An AI application is only useful if other systems can interact with it reliably. I build APIs that expose AI capabilities with the same rigor you'd expect from any production service: proper error handling, input validation, rate limiting, authentication, and comprehensive documentation.

My APIs return structured, predictable responses. Even when the underlying AI is probabilistic, the API contract is clear. This means your frontend developers, mobile apps, and integration partners know exactly what to expect.
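One way to keep the contract stable when the model's raw output is not is to validate that output and always return the same envelope. The sketch below is an assumption-laden example, not a description of any specific service: the sentiment task, the field names, and `to_response` are hypothetical, and a standard-library dataclass stands in for the Pydantic model a FastAPI service would more likely use.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional

ALLOWED_SENTIMENTS = {"positive", "neutral", "negative"}


@dataclass
class SentimentResponse:
    """Envelope returned to callers; the same keys appear on success and failure."""
    status: str                  # "ok" or "error"
    sentiment: Optional[str]
    confidence: Optional[float]
    error: Optional[str]


def to_response(raw_model_output: str) -> dict:
    """Validate raw model text and convert it into the fixed response shape."""
    try:
        parsed = json.loads(raw_model_output)
        sentiment = parsed["sentiment"]
        confidence = float(parsed["confidence"])
        if sentiment not in ALLOWED_SENTIMENTS:
            raise ValueError(f"unexpected sentiment: {sentiment!r}")
        if not 0.0 <= confidence <= 1.0:
            raise ValueError("confidence out of range")
    except (KeyError, TypeError, ValueError) as exc:
        # json.JSONDecodeError is a ValueError, so malformed JSON lands here too.
        return asdict(SentimentResponse("error", None, None, str(exc)))
    return asdict(SentimentResponse("ok", sentiment, confidence, None))
```

Callers can branch on `status` alone, because a malformed model reply produces the same set of keys as a valid one.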

I also design for observability. When something goes wrong—or just behaves unexpectedly—you have the logging and metrics to understand why. AI systems can be opaque; I work to make them as transparent as possible.
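A small example of what that can look like in code: the sketch below wraps a model call so that every invocation emits one structured log line with its outcome and latency. The wrapper name `call_with_telemetry`, the logger name, and the logged fields are assumptions chosen for illustration; a production setup would likely also export metrics to a monitoring system.

```python
import json
import logging
import time

logger = logging.getLogger("ai_calls")  # hypothetical logger name


def call_with_telemetry(model, prompt, *, operation):
    """Call `model(prompt)` and log one JSON line describing the call."""
    start = time.perf_counter()
    outcome = "ok"
    try:
        return model(prompt)
    except Exception:
        outcome = "error"
        raise  # the caller still sees the original exception
    finally:
        # Runs on both success and failure, so every call leaves a trace.
        logger.info(json.dumps({
            "operation": operation,
            "outcome": outcome,
            "latency_ms": round((time.perf_counter() - start) * 1000, 2),
            "prompt_chars": len(prompt),
        }))
```

Logging the prompt length rather than the prompt itself is a deliberate choice here, since prompts may contain data that should not end up in logs.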

For enterprise environments, I build with compliance and security requirements in mind from the start. That means proper authentication, audit logging, data handling policies, and automated testing integrated into CI/CD. Enterprise SaaS applications need to meet standards that hobby projects don't, and my years in enterprise environments mean I build to those standards by default.

Architecture Patterns I Use

AI-native applications have their own architectural patterns. Some approaches I apply frequently:

Embedding-First Design

I structure data storage around embeddings from the start. Every piece of content gets embedded on ingestion, enabling semantic search and similarity operations throughout the application.
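To make the "embed on ingestion" idea concrete, here is a minimal in-memory sketch. It rests on loud assumptions: `embed` is a toy hashed bag-of-words vector, not a semantic embedding, and `ContentStore` is a hypothetical stand-in for a vector database such as Chroma, Qdrant, or pgvector. Only the shape of the flow (embed at write time, compare vectors at read time) carries over to a real system.

```python
import hashlib
import math

DIM = 64  # assumed vector size for the toy embedding


def embed(text: str) -> list:
    """Toy embedding: hash each token into a bucket, then L2-normalize.

    A real application would call an embedding model here instead.
    """
    vec = [0.0] * DIM
    for token in text.lower().split():
        # hashlib gives a hash that is stable across processes, unlike built-in hash().
        idx = int(hashlib.md5(token.encode()).hexdigest(), 16) % DIM
        vec[idx] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class ContentStore:
    """Stores every item together with its embedding, computed at ingestion."""

    def __init__(self):
        self._items = []  # list of (text, embedding) pairs

    def ingest(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 3) -> list:
        q = embed(query)
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, e)), text) for text, e in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]
```

Because the embedding is computed once at ingestion, similarity queries only need to embed the query text, which is what keeps search available everywhere in the application.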

Async Processing for LLM Operations

LLM calls can be slow, often taking seconds rather than milliseconds. I design applications to handle AI operations asynchronously, keeping interfaces responsive while heavy processing happens in the background.
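A minimal sketch of this pattern with `asyncio`: the caller gets a job ID back immediately and checks on the result later. The names `JobQueue` and `fake_llm_call` are hypothetical, the LLM call is simulated with a short sleep, and results live in memory; a production system would more likely use a task queue and persistent storage.

```python
import asyncio
import uuid


async def fake_llm_call(prompt: str) -> str:
    # Stand-in for network and inference latency; no real model is called.
    await asyncio.sleep(0.05)
    return f"summary of: {prompt}"


class JobQueue:
    """Tracks background LLM jobs so callers never block on the model."""

    def __init__(self):
        self._results = {}
        self._tasks = {}

    def submit(self, prompt: str) -> str:
        """Schedule the work and return a job ID right away (needs a running event loop)."""
        job_id = uuid.uuid4().hex
        self._results[job_id] = {"status": "pending", "result": None}
        self._tasks[job_id] = asyncio.create_task(self._run(job_id, prompt))
        return job_id

    async def _run(self, job_id: str, prompt: str) -> None:
        try:
            result = await fake_llm_call(prompt)
            self._results[job_id] = {"status": "done", "result": result}
        except Exception as exc:
            self._results[job_id] = {"status": "failed", "result": str(exc)}

    def status(self, job_id: str) -> dict:
        return self._results[job_id]

    async def wait(self, job_id: str) -> dict:
        await self._tasks[job_id]
        return self._results[job_id]


async def demo():
    queue = JobQueue()
    job_id = queue.submit("quarterly report")
    immediate = queue.status(job_id)["status"]  # read before the task has had a chance to run
    final = await queue.wait(job_id)
    return immediate, final
```

In a web API, `submit` would sit behind the request that starts the work and `status` behind a polling endpoint, so the interface stays responsive while the model call completes.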

Graceful Degradation

When the AI component fails or returns low-confidence results, the application should still function. I build fallback paths and confidence thresholds so users always have a workable experience.
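The fallback logic can be sketched in a few lines. This example assumes a model that returns a `(label, confidence)` pair; the 0.7 threshold, the `Suggestion` type, and the keyword-based `keyword_fallback` are illustrative choices, not fixed recommendations.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

CONFIDENCE_THRESHOLD = 0.7  # assumed value; tune per use case


@dataclass
class Suggestion:
    value: str
    source: str  # "model" or "fallback", so the UI can show where the answer came from


def keyword_fallback(text: str) -> str:
    # Deterministic rule used whenever the model cannot be trusted.
    return "billing" if "invoice" in text.lower() else "general"


def categorize(text: str, model: Callable[[str], Tuple[str, float]]) -> Suggestion:
    """Use the model's answer only when it succeeds and is confident enough."""
    try:
        label, confidence = model(text)
    except Exception:
        # Model outage or timeout: degrade to the rule-based path.
        return Suggestion(keyword_fallback(text), "fallback")
    if confidence < CONFIDENCE_THRESHOLD:
        return Suggestion(keyword_fallback(text), "fallback")
    return Suggestion(label, "model")
```

Returning the `source` alongside the value lets the interface treat fallback results differently, for example by asking the user to confirm them.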

Building Something Intelligent?

If you're planning an application where AI isn't just a feature but the foundation, I'd like to hear about it.

Let's Talk Architecture